Learning Music Theory the Programmer's Way

I have an amazing girlfriend who plays music at a level I'll never reach. And that's fine. I've also held an interest in playing and making music since I was a child, but never with the real drive or opportunity to pursue it. At one point in my life, I played basic piano, violin, cello, bass... if it had strings, I could play it, except guitar, for some ungodly reason.

She has revitalized my love for music, and my drive to understand it and listen to it thoroughly and truly. With a lot of my playlist made up of bands like AJJ, Crywank, Jim Jones Drink Stop, Pigeon Pit, The Flatbottoms... (I think you get the vibe), there's a lot of opportunity to study chord progressions, strumming, and fairly basic composition just through listening. And somehow this astounding woman has gotten me to hear music in a new light: each strum, each note in the chord, the way they ebb and flow and backtrack, the way instruments play off each other.

Then she introduced me to Schoenberg's twelve-tone technique and Mozart's musical dice game: two methods of algorithmically composing pieces via predefined rulesets. With those, theory began clicking.

So I figured it was time to start learning what the hell any of it means.

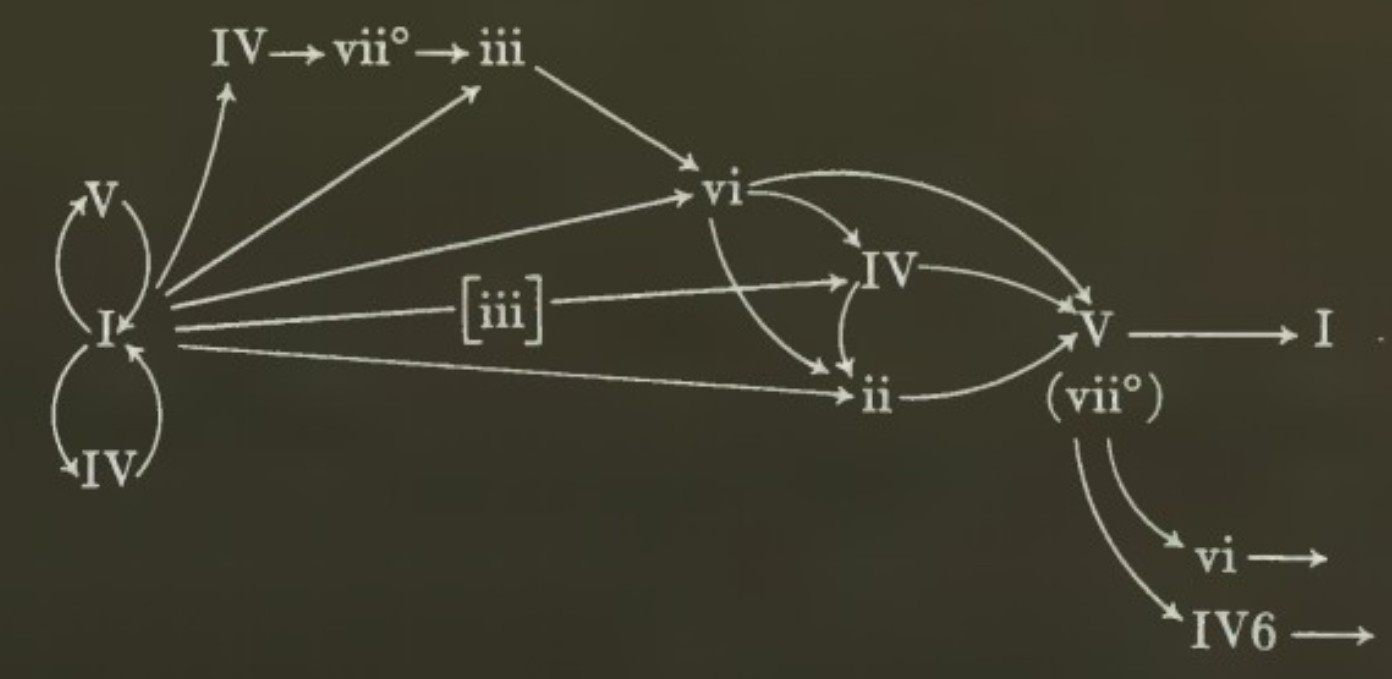

Did you know there are flowcharts for music concepts? Chord progressions and whatnot. Who knew you could do actual data visualization on the programmatic language of music? But that's what I needed to make things start clicking, to resolve damn near twenty years of being completely and totally lost. That, and a woman who truly makes me want to build my skill sets and be a better me. Anyway, I'm babbling.

This is the image that made things start clicking for me, and almost immediately after seeing it, I wanted to code it. This ebb and flow was begging to be put into code. Fuck if I knew what the numerals meant, or how or why things transition; that could all come later. For now, just slap it into a codebase and see what happens.

Node.js has been my go-to for a while now, coming from a background in lower-level languages like C and Java. It just... works, ya know? And it isn't the pain in the ass that Python can be with whitespace. I pulled up VS Code, started slapping out some variables, and made a basic mapping of the chart in the object below.

```javascript
// Each chord maps to the chords the flowchart allows it to move to next.
const chordMap = {
  I: ["ii", "iii", "IV", "V", "vi"],
  ii: ["V", "viip"],
  iii: ["vi"],
  IV: ["I", "ii", "V", "viip"], // was "vii", which has no entry of its own; "viip" assumed
  V: ["I", "vi", "IV6"],
  vi: ["ii", "IV", "V"],
  viip: ["I", "vi", "IV6"],
  IV6: ["I"]
};
```

At a base level, this should work, I thought to myself (and I mean, it can and should; it just needs some logic applied when it's used). I set up a basic Express server with just an endpoint for generating things. I didn't bother making an HTML page or anything; all I really care about is the data that comes out of this Frankenstein of a script.
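The map only turns into a progression once something walks it. The original `generateProgression()` isn't shown, so below is one plausible sketch of it. It assumes the simplest reading of the chart: start on a chord, then keep picking one of the current chord's allowed next chords at random. The `findDanglingChords()` helper and the `rng` parameter are hypothetical additions, used for checking the map and for testing. The map is copied here with IV pointing at "viip", on the assumption that the bare "vii" meant the same chord.

```javascript
// Hypothetical sketch: a random walk over the flowchart, not the original code.
const chordMap = {
  I: ["ii", "iii", "IV", "V", "vi"],
  ii: ["V", "viip"],
  iii: ["vi"],
  IV: ["I", "ii", "V", "viip"], // assumed: the original "vii" means "viip"
  V: ["I", "vi", "IV6"],
  vi: ["ii", "IV", "V"],
  viip: ["I", "vi", "IV6"],
  IV6: ["I"],
};

// Returns every transition target that has no entry of its own in the map.
// A walk that lands on one of these has nowhere to go next.
function findDanglingChords(map) {
  const targets = Object.values(map).flat();
  return [...new Set(targets)].filter((chord) => !(chord in map));
}

// Starts on `start` and appends chords until the progression has `length` entries.
// Each next chord is picked uniformly at random from the current chord's options.
function generateProgression(start, length, map = chordMap, rng = Math.random) {
  if (!(start in map)) throw new Error(`Unknown chord: ${start}`);
  const progression = [start];
  while (progression.length < length) {
    const current = progression[progression.length - 1];
    const options = map[current];
    if (!options || options.length === 0) {
      throw new Error(`No transitions from ${current}`);
    }
    progression.push(options[Math.floor(rng() * options.length)]);
  }
  return progression;
}

console.log(findDanglingChords(chordMap)); // → []
console.log(generateProgression("I", 6).join(" → ")); // random each run
```

Run against the original map, `findDanglingChords()` would return `["vii"]`. That is exactly the kind of hole that makes a walk stall. Uniform picks are the most literal reading of the chart's arrows, since the chart doesn't say any move is more likely than another.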

The general concept I had was to get a piece length from the request, as well as the key it should play in. Then build the chords for that key's scale, generate a progression of the desired length, and map the chords of that scale onto that progression. Then write the whole thing out to a MIDI file, because I hate my ears.

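```javascript
// Inside the Express route handler (`req` is the incoming request).
const key = req.query.key || "C";
const length = parseInt(req.query.length, 10) || 6;

// The original snippet used `scale` without defining it. This assumes the major
// scale is built from `key` first (the helper name `buildScale` is hypothetical).
const scale = buildScale(key);
const chordsInKey = buildChords(scale);
const progression = generateProgression("I", length);
const chordNotes = progression.map(p => chordsInKey[p]);

const midiFile = writeMidiFile(chordNotes, "progression.mid");
```

This... kind of worked? After a bit of weirdness with the notes I used (I had to remove E# from the note array, so RIP generating Midwest emo, I suppose. May as well scrap the project, actually...), I got some proper MIDI files generating, with music that... mostly follows the goal?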

As a base, this technically functions. Just not well.
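The pipeline above relies on a scale builder and `buildChords()`, and neither is shown. Here's a minimal sketch under a few stated assumptions. It uses a sharps-only chromatic array and the standard major-scale step pattern (whole, whole, half, whole, whole, whole, half). Triads are built by stacking thirds inside the scale from each degree. Everything here is my own naming, not the original code: `buildScale`, `CHROMATIC`, `NUMERALS`, and treating "viip" as the diminished vii chord. The result is keyed by the same Roman numerals as the chord map, including "IV6", which is what lets `chordsInKey[p]` look up every step of a progression. In those numerals, uppercase is a major triad and lowercase is minor.

```javascript
// Hypothetical sketch of the scale and chord helpers; the originals aren't shown.
// Sharps-only chromatic scale: one name per pitch, so each step is one slot.
const CHROMATIC = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];

// Semitone steps of a major scale; the final half step back to the root is implied.
const MAJOR_STEPS = [2, 2, 1, 2, 2, 2];

function buildScale(key) {
  let index = CHROMATIC.indexOf(key);
  if (index === -1) throw new Error(`Unsupported key: ${key}`);
  const scale = [CHROMATIC[index]];
  for (const step of MAJOR_STEPS) {
    index = (index + step) % CHROMATIC.length;
    scale.push(CHROMATIC[index]);
  }
  return scale; // seven note names
}

// Roman numerals for degrees 1-7; "viip" assumed to mean the diminished vii chord.
const NUMERALS = ["I", "ii", "iii", "IV", "V", "vi", "viip"];

function buildChords(scale) {
  const chords = {};
  NUMERALS.forEach((numeral, degree) => {
    // Root, third, fifth: every other note of the scale, wrapping around.
    chords[numeral] = [0, 2, 4].map((offset) => scale[(degree + offset) % 7]);
  });
  // IV6 is IV in first inversion: the third moves to the bottom.
  const [root, third, fifth] = chords.IV;
  chords.IV6 = [third, fifth, root];
  return chords;
}

const chords = buildChords(buildScale("C"));
console.log(chords.V);    // → [ 'G', 'B', 'D' ]
console.log(chords.viip); // → [ 'B', 'D', 'F' ]
console.log(chords.IV6);  // → [ 'A', 'C', 'F' ]
```

Spelling every pitch with a single name keeps each step exactly one array slot away. That is probably why E# had to go: it names the same pitch as F. The trade-off is enharmonic spelling in some keys. F major comes out with A# instead of Bb, for example, but the pitches themselves are still right.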

The current core of this is the function that generates the MIDI files (it should probably be split up a bit, but fuck it). It takes the chord array generated just prior and, for each chord, follows a predefined pattern that places either the low, mid, or top note of the chord, or plays the full chord. It randomizes velocity to make things sound less... robotic? I guess? Idk, it just generally sounds better, although the ranges should be tuned a bit to sound more natural. The bass note is always the lowest note of the chord, dropped down to octave 2 (oh yeah, everything else gets played in the 4th octave right now).

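```javascript
const MidiWriter = require("midi-writer-js");

// `chordNotes` comes from the progression step, e.g. [["C", "E", "G"], ...].
// `pattern` is one of the predefined rhythm patterns (an example follows below).
const left = new MidiWriter.Track();  // bass
const right = new MidiWriter.Track(); // chord tones

for (const chord of chordNotes) {
  // Every chord tone is played in octave 4, e.g. "E" -> "E4".
  const midiChord = chord.map(n => n + "4");
  // The bass is the chord's lowest note, down in octave 2.
  const bassNote = chord[0] + "2";

  left.addEvent(new MidiWriter.NoteEvent({
    pitch: [bassNote],
    // Should equal the pattern's total length. "1" (a whole note) matches a
    // four-quarter-note pattern; "2" made the bass run ahead of the right hand.
    duration: "1",
    velocity: Math.floor(50 + Math.random() * 30) // 50-79
  }));

  for (const p of pattern) {
    let notes;
    switch (p.part) {
      case "low": notes = [midiChord[0]]; break;
      case "mid": notes = [midiChord[1]]; break;
      case "top": notes = [midiChord[2]]; break;
      default: notes = midiChord; break; // "full": the whole chord at once
    }

    right.addEvent(new MidiWriter.NoteEvent({
      pitch: notes,
      duration: p.dur,
      velocity: Math.floor(60 + Math.random() * 40) // 60-99
    }));
  }
}
```

Timing for notes comes from the predefined patterns, one of which is used throughout the entire piece. For example, this one is played entirely in quarter notes: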

```javascript
[
  { dur: "4", part: "low" },
  { dur: "4", part: "mid" },
  { dur: "4", part: "top" },
  { dur: "4", part: "full" }
]
```

And that's... pretty much all I've done so far. Functionally, this script takes any given note, generates a major scale from it, builds the chords for that scale, generates a chord progression based on predefined rules, applies the chords to that progression, and writes the result out to a MIDI file.
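The MIDI loop gives every bass note one fixed duration per chord, while the right hand plays the whole pattern. The two tracks only stay lined up when the pattern's total length matches that bass note. A small helper that totals a pattern makes mismatches easy to spot. `quartersIn()` and `patternLength()` are hypothetical names. The sketch only handles the plain numeric midi-writer-js duration strings ("1", "2", "4", "8", "16"); dotted and triplet values are left out.

```javascript
// Hypothetical helpers for checking how long a rhythm pattern lasts.
// A plain duration "n" lasts 4 / n quarter notes: "1" = 4, "2" = 2, "4" = 1, "8" = 0.5.
function quartersIn(dur) {
  const n = Number(dur);
  if (![1, 2, 4, 8, 16].includes(n)) throw new Error(`Unhandled duration: ${dur}`);
  return 4 / n;
}

// Total length of one pass through a pattern, in quarter notes.
function patternLength(pattern) {
  return pattern.reduce((total, step) => total + quartersIn(step.dur), 0);
}

const quarterPattern = [
  { dur: "4", part: "low" },
  { dur: "4", part: "mid" },
  { dur: "4", part: "top" },
  { dur: "4", part: "full" },
];

console.log(patternLength(quarterPattern)); // → 4
```

The example pattern totals four quarter notes, one bar of 4/4. A bass note with duration "1" (a whole note) lines up with it, while a "2" would only cover the first half of each chord.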

Moving forward, I'm going to learn more about what each portion of the flowchart means, and what it's actually doing and using to make proper pieces. As I learn that, I'll implement those concepts in the code, likely in the `generateProgression()` function, to create pieces that ebb and flow more naturally.

What have I learned from this?

That, luckily, music can in fact be seen from the perspective of a programming language. There are definitely flaws in doing so, and there's so, so, so much that goes into actual art pieces that can never be put into code. But as it stands, I've effectively broken into music theory the same way I would any new programming language. Through that, I'm slowly breaking down the barriers that have kept me from properly understanding and conceptualizing music theory and creation. Hopefully the end result of this project is a neat, niche generator that lets me compose pieces through algorithmic means.